At Los Altos High School, I took the Advanced Scientific Investigation class, where I researched how to improve the accuracy of Simultaneous Localization and Mapping (SLAM).

Project Description

Simultaneous Localization and Mapping (SLAM) is used to track the position of a moving object, such as a robot, while constructing a map of its environment. Various sensors can be used to determine the robot’s position, including LIDARs, radars, gyroscopes, and cameras. The problem is that no sensor is 100% accurate, so the calculated position always contains some error. To address this issue, I focused my research on improving the accuracy of SLAM by combining data from multiple sensors.

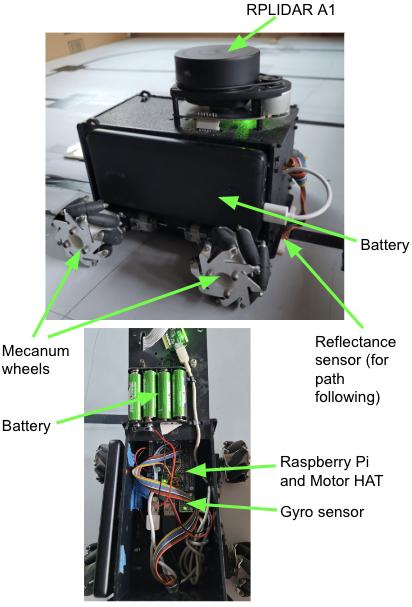

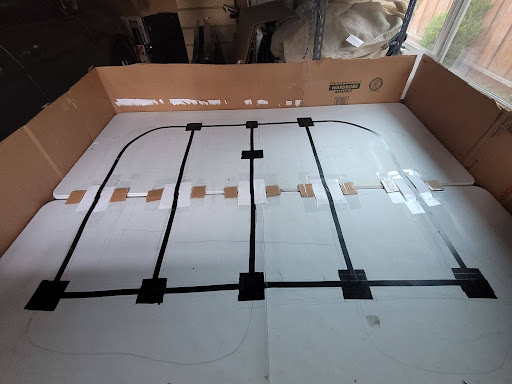

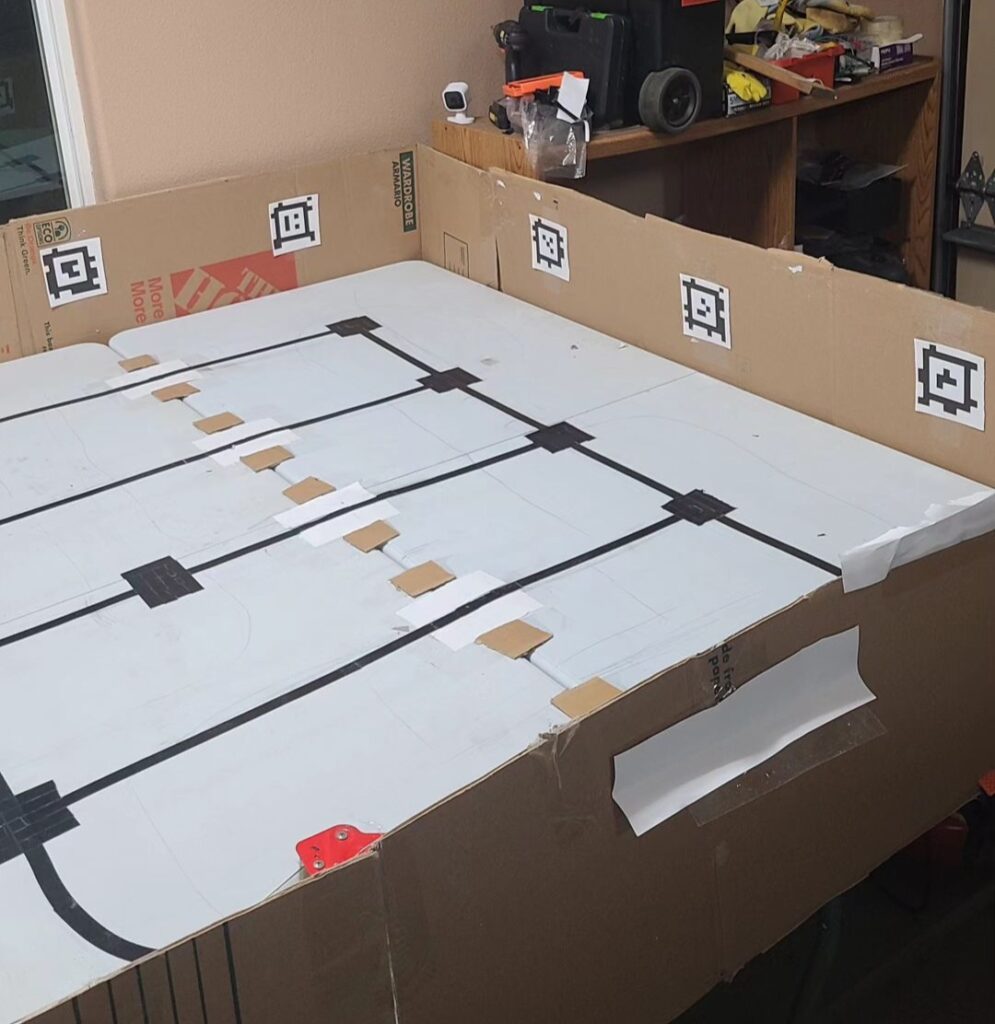

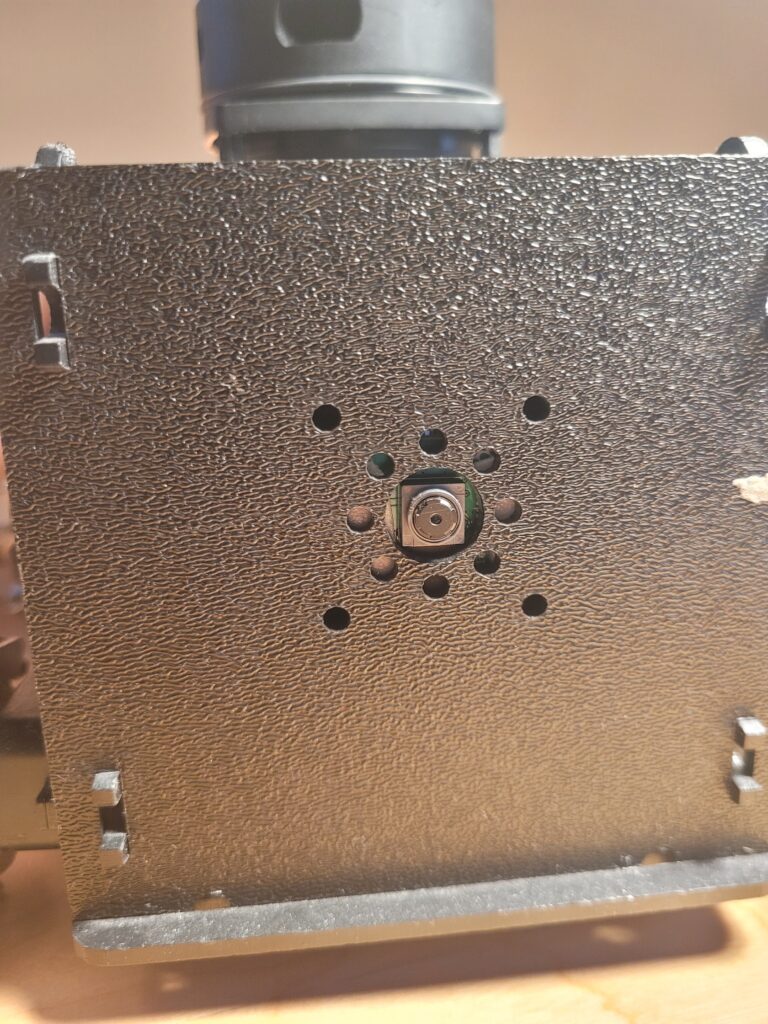

To collect data for my project, I built a robot equipped with a LIDAR, a gyro sensor, and a reflectance sensor. The robot drove along a preprogrammed path on the field and collected data from the sensors. Then, I used this data to calculate the robot’s path. When calculating the path with data from the LIDAR, I used the Iterative Closest Point (ICP) algorithm to calculate the transformation between consecutive LIDAR readings.
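To illustrate the idea behind ICP, here is a minimal 2D sketch in plain Python. It is not the code used in the project: the function names (`best_rigid_transform`, `apply_transform`, `icp`), the brute-force nearest-neighbor matching, and the fixed iteration count are all assumptions chosen for readability. Each iteration pairs every point of the new scan with its closest point in the previous scan, then solves for the rotation and translation that best align the pairs.

```python
import math


def best_rigid_transform(src, dst):
    """Least-squares rotation angle and translation mapping paired src points onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        # Work with points centered on their respective centroids.
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that carries the rotated src centroid onto the dst centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def apply_transform(points, theta, tx, ty):
    """Rotate each point by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp(src, dst, iterations=20):
    """Estimate (theta, tx, ty) aligning scan src to scan dst (illustrative only)."""
    theta_total = tx_total = ty_total = 0.0
    current = list(src)
    for _ in range(iterations):
        # Assumption: brute-force closest-point matching, no outlier rejection.
        matched = [
            min(dst, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
            for p in current
        ]
        theta, tx, ty = best_rigid_transform(current, matched)
        current = apply_transform(current, theta, tx, ty)
        # Compose this step with the transform accumulated so far.
        c, s = math.cos(theta), math.sin(theta)
        tx_total, ty_total = (
            c * tx_total - s * ty_total + tx,
            s * tx_total + c * ty_total + ty,
        )
        theta_total += theta
    return theta_total, tx_total, ty_total
```

Calling `icp(previous_scan, new_scan)` on two lists of `(x, y)` points returns one estimated rotation and translation per pair of scans. Because matching relies on nearest neighbors, a sketch like this works best when the motion between scans is small relative to the spacing of the points.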

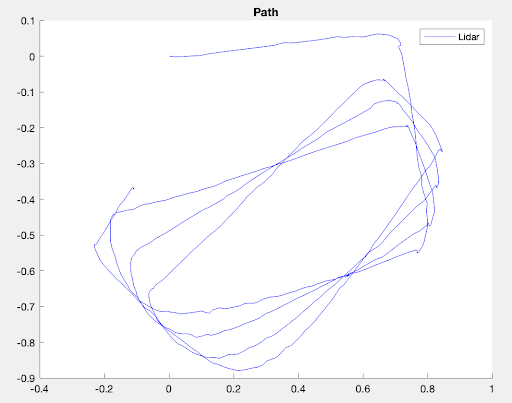

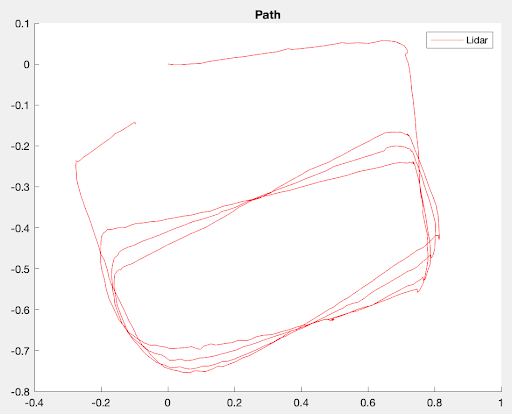

The robot drove on many paths, but I chose this one for my research findings because the calculated path differed significantly depending on which sensors I used.

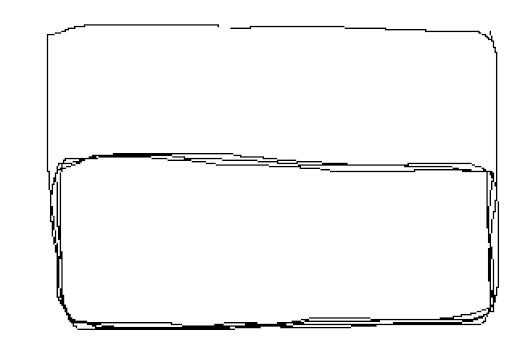

When I used only data from the LIDAR to calculate the robot’s position, the ICP algorithm underestimated the robot’s rotations at sharp turns. These small rotation errors accumulated, and the calculated path ended up very different from the ground truth. To improve the accuracy of the path, I combined rotation data from the gyro sensor with position data from the LIDAR ICP algorithm. This resulted in a small improvement in the calculated path.
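The effect of accumulated rotation error, and one simple way a gyro heading could replace the ICP rotation, can be sketched as follows. This is a hedged illustration rather than the project's fusion code: the function `integrate_path`, the step format `(dtheta, dx, dy)`, and the choice to overwrite the heading with the gyro reading after every step are assumptions.

```python
import math


def integrate_path(icp_steps, gyro_headings=None):
    """Chain per-scan motion estimates into a path of (x, y, heading) poses.

    icp_steps: list of (dtheta, dx, dy), with dx and dy expressed in the robot
        frame at the start of each step (hypothetical format).
    gyro_headings: optional list of absolute headings in radians, one per step.
        When given, they replace the heading accumulated from ICP.
    """
    x = y = heading = 0.0
    path = [(x, y, heading)]
    for i, (dtheta, dx, dy) in enumerate(icp_steps):
        # Rotate the robot-frame displacement into the field frame.
        c, s = math.cos(heading), math.sin(heading)
        x += c * dx - s * dy
        y += s * dx + c * dy
        if gyro_headings is None:
            heading += dtheta            # LIDAR only: rotation errors accumulate
        else:
            heading = gyro_headings[i]   # assumption: trust the gyro for rotation
        path.append((x, y, heading))
    return path


# Made-up example: the robot drives 1 unit, turns 90 degrees, drives 1 unit,
# but ICP reports the turn as only 60 degrees.
steps = [(math.pi / 3, 1.0, 0.0), (0.0, 1.0, 0.0)]
lidar_only = integrate_path(steps)
with_gyro = integrate_path(steps, gyro_headings=[math.pi / 2, math.pi / 2])
```

In this made-up example, the single underestimated turn already shifts the LIDAR-only endpoint away from the gyro-corrected one, and every later step inherits that heading error.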

To further improve the accuracy of the path, I decided to use AprilTags. AprilTags are 2D barcodes (similar to QR codes) that are used for high-accuracy localization. This is how AprilTag localization works: I put AprilTags on the field and store the locations of the AprilTags on the robot. When the robot’s camera sees an AprilTag, it calculates its position and rotation relative to the AprilTag. Then, using the stored position of the AprilTag, the robot determines its absolute position and rotation on the field.
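The last step, turning a pose relative to a tag into an absolute pose on the field, amounts to composing two poses. Below is a minimal 2D sketch under stated assumptions: poses are `(x, y, theta)` tuples, the table `TAG_POSES` and its tag ID and coordinates are made up, and the detector is assumed to already provide the robot's pose in the tag's frame. It does not call any real AprilTag library.

```python
import math

# Hypothetical stored tag locations on the field: tag ID -> (x, y, theta).
TAG_POSES = {7: (2.0, 1.0, math.pi / 2)}


def compose(a, b):
    """Express pose b, given in the frame of pose a, in a's parent frame."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    theta = at + bt
    # Wrap the angle into the range [-pi, pi].
    theta = math.atan2(math.sin(theta), math.cos(theta))
    return (ax + c * bx - s * by, ay + s * bx + c * by, theta)


def robot_pose_on_field(tag_id, robot_in_tag):
    """Absolute robot pose from a tag's stored pose and the robot's pose relative to it."""
    return compose(TAG_POSES[tag_id], robot_in_tag)


# Made-up detection: the robot is 1 unit along the tag's x-axis, facing the tag.
pose = robot_pose_on_field(7, (1.0, 0.0, math.pi))
```

Because each detection yields an absolute pose, its error does not build up over time the way chained scan-to-scan estimates do.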

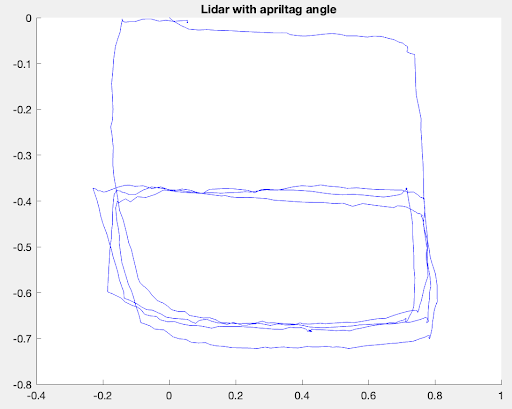

The robot drove on the same path as before, and I calculated the path using both position data from the LIDAR ICP algorithm and rotation data from AprilTag detection. The calculated path was very accurate and closely matched the ground truth.

Overall, when I used data only from the LIDAR ICP algorithm to calculate the path, the path was very inaccurate because the ICP algorithm wasn’t able to accurately determine the rotation at sharp turns. When I combined data from the LIDAR ICP algorithm with data from the gyro, the accuracy of the path improved, but it still wasn’t perfect. When I combined data from the LIDAR ICP algorithm with data from AprilTag detection, the path was very accurate and closely matched the ground truth. This demonstrates that combining data from multiple sensors can improve the accuracy of SLAM.

Awards

Project Resources

Project documents:

Project source code: